In brief: You can ask ChatGPT pretty much anything, and in most cases it will give you an answer. But there are a handful of names that cause the AI language model to break down. The reason relates to generative AI's tendency to make things up, and to the legal consequences when the subjects are real people.

Social media users recently found that entering the name "David Mayer" into ChatGPT caused the system to refuse to respond and end the session. 404 Media found that the same thing happened when it asked about "Jonathan Zittrain," a Harvard Law School professor, and "Jonathan Turley," a George Washington University Law School professor.

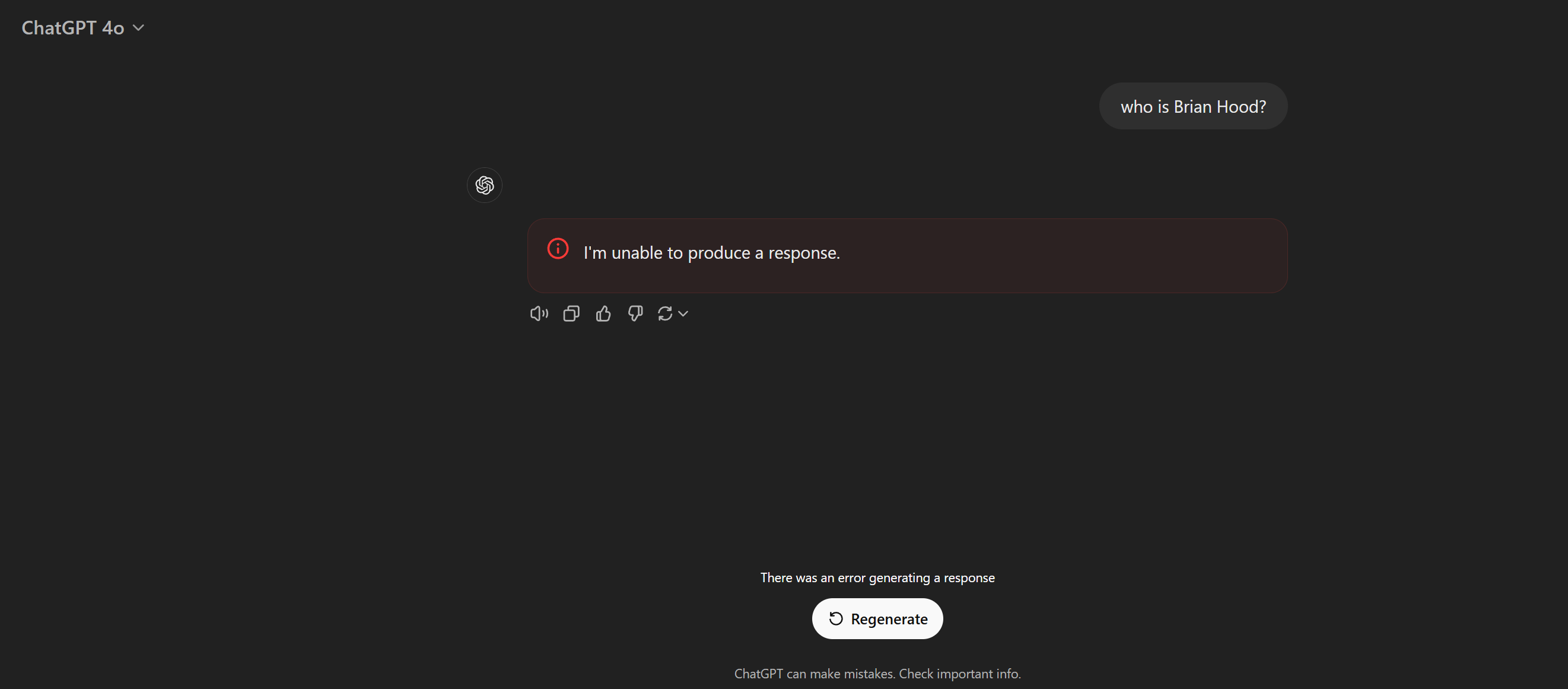

A few other names also cause ChatGPT to respond with "I'm unable to produce a response" or "There was an error generating a response." Even the usual tricks, such as entering the name backwards and asking the program to print it from right to left, don't work.

Ars Technica compiled a list of ChatGPT-breaking names. David Mayer no longer stuns the chatbot, but these do: Brian Hood, Jonathan Turley, Jonathan Zittrain, David Faber, and Guido Scorza.

The reason for this behaviour stems from all-too-familiar AI hallucinations. Brian Hood, an Australian mayor, threatened to sue OpenAI in 2023 after ChatGPT falsely claimed he had been imprisoned for bribery; in reality, he was a whistleblower. OpenAI eventually agreed to filter out the untrue statements, adding a hard-coded filter on his name.

ChatGPT falsely claimed Turley had been involved in a non-existent sexual harassment scandal, and even cited a Washington Post article in its response, one the AI had also made up. Turley said he never threatened to sue OpenAI and that the company never contacted him.

There's no obvious reason why Zittrain's name is blocked. He recently wrote an article in The Atlantic called "We Need to Control AI Agents Now," and, like Turley, has had his work cited in The New York Times' copyright lawsuit against OpenAI and Microsoft. However, entering the names of other authors whose work is cited in the suit does not cause ChatGPT to break.

Why David Mayer was blocked and then unblocked is also unclear. Some believe it is connected to David Mayer de Rothschild, though there is no evidence of this.

Ars points out that these hard-coded filters can cause problems for ChatGPT users. Researchers have shown that an attacker could interrupt a session with a visual prompt injection: one of the names, rendered in a barely legible font and embedded in an image. Someone could also exploit the blocks by adding one of the names to a website, potentially preventing ChatGPT from processing the data it contains, though not everyone would see that as a bad thing.

In related news, several Canadian news and media companies have joined forces to sue OpenAI for using their articles without permission. They are asking for C$20,000 ($14,239) per infringement, meaning a loss in court could cost the company billions.